Crush Your Next Interview with LLMs: A Technical Guide to Smarter Prep

How to use language models to generate questions, evaluate answers, and simulate behavioral interviews.

Interview prep is brutal. Scouring LeetCode for fresh problems, grinding through repetitive coding challenges, and rehearsing "Tell me about a time…" answers can feel like shouting into a void. But what if you had an on-demand coach that generates technical questions, critiques your solutions, and even roleplays as an interviewer?

Large Language Models (LLMs) like GPT-4 and DeepSeek are changing the game. In this guide, I’ll show you how to automate the grunt work of interview prep with code snippets you can adapt today. Let’s dive in.

Get the entire code, along with a web UI, on my GitHub.

Prerequisites:

Python installed on your machine

An API key for OpenAI (or your LLM provider of choice)

Basic familiarity with Python programming

A code editor of your choice

Step 0: Setting Up the OpenAI Package and API Key

Before we jump into the code, let’s set up your environment.

1. Install the OpenAI Package

Run this command in your terminal to install the official OpenAI Python package:

pip install openai

2. Store Your API Key Securely

Never hardcode your API key in your scripts. Instead, store it in an environment variable. Here’s how:

On macOS/Linux:

Open your terminal and edit your shell configuration file (e.g., .bashrc, .zshrc, or .bash_profile):

nano ~/.zshrc

Add this line to the file:

export OPENAI_API_KEY="your-api-key-here"

Save and exit, then reload the file:

source ~/.zshrc

On Windows:

Open Command Prompt and run:

setx OPENAI_API_KEY "your-api-key-here"

Restart your terminal or IDE for the change to take effect.

Accessing the Key in Python:

Use the os module to retrieve the key securely:

import os

import openai

openai.api_key = os.getenv("OPENAI_API_KEY")

Now you're ready to use the OpenAI API in your scripts!
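With version 1.x of the `openai` package you can also create an explicit client object and pass it around. A minimal sketch, assuming openai>=1.0; the helper names `get_api_key` and `make_client` are hypothetical. The point is to fail fast with a readable message when the environment variable is missing, rather than discovering it on the first API call.

```python
import os


def get_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or fail with a clear message."""
    key = os.getenv(var_name)
    if not key:
        raise RuntimeError(
            f"{var_name} is not set. Add it to your shell config (macOS/Linux) "
            "or run setx (Windows), then restart your terminal."
        )
    return key


def make_client():
    """Create an OpenAI client (assumes openai>=1.0 is installed)."""
    # Imported lazily so get_api_key() works even without the package.
    from openai import OpenAI

    # OpenAI() would also read OPENAI_API_KEY on its own; passing it
    # explicitly surfaces a missing key before the first request.
    return OpenAI(api_key=get_api_key())
```

Call `client = make_client()` once at startup and reuse that client for every request.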

1. Generating Technical Questions (No More LeetCode Burnout)

The Problem: LeetCode’s question bank is finite. After solving 50 binary tree problems, your brain starts recycling solutions.

The Fix: Use an LLM to generate endless, role-specific questions with a short Python script.
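A minimal sketch of such a script, assuming the `openai` package version 1.x and a `client` created with `OpenAI()`. The helper names and the default model name `gpt-4o` are assumptions; swap in whichever model your account offers. Keeping the prompt-building separate from the API call makes it easy to tweak the wording for more specific questions.

```python
def build_question_prompt(role: str, topic: str, difficulty: str = "medium") -> list[dict]:
    """Build chat messages asking for one original interview question."""
    system = (
        f"You are a technical interviewer hiring for a {role} position. "
        "Write original questions, not copies of well-known LeetCode problems."
    )
    user = (
        f"Generate one {difficulty} interview question about {topic}. "
        "Include a problem statement and two sample inputs with expected outputs. "
        "Do not include the solution."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def generate_question(client, role: str, topic: str, difficulty: str = "medium",
                      model: str = "gpt-4o") -> str:
    """Send the prompt and return the model's question text."""
    response = client.chat.completions.create(
        model=model,
        messages=build_question_prompt(role, topic, difficulty),
        temperature=0.9,  # higher temperature gives more varied questions
    )
    return response.choices[0].message.content
```

For example, `generate_question(client, "backend engineer", "greedy algorithms")` returns one question as a string.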

Sample Output:

"You are given an array of integers where each element represents the maximum jump length from that position. Determine if you can reach the last index starting from the first.

Sample input: [2,3,1,1,4] → Output: True

Sample input: [3,2,1,0,4] → Output: False"
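Generated questions sometimes ship with wrong sample outputs, so it pays to check them against a reference solution before you practice. For the jump-length question above, one standard approach is greedy: track the farthest index reachable so far, and fail as soon as you stand on an index beyond it. This is a sketch of one common solution, not the only one.

```python
def can_reach_end(nums: list[int]) -> bool:
    """Return True if the last index is reachable starting from index 0."""
    farthest = 0  # farthest index reachable so far
    for i, jump in enumerate(nums):
        if i > farthest:
            # We can't even stand on index i, so the end is unreachable.
            return False
        farthest = max(farthest, i + jump)
    return True


# Check the sample cases that came with the generated question.
samples = [([2, 3, 1, 1, 4], True), ([3, 2, 1, 0, 4], False)]
for nums, expected in samples:
    assert can_reach_end(nums) == expected, f"sample mismatch for {nums}"
```

This runs in O(n) time and O(1) space; if a generated sample disagrees with a solution you trust, question the sample first.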

Pro Tip: Refine your prompts for specificity:

"Generate a Google-style system design question about designing YouTube."

"Give me a Python question that tests recursion with strings."

2. Evaluating Your Code Like a Senior Engineer

The Problem: You wrote a solution, but is it optimal? Did you miss an edge case?

The Fix: Feed your code to the LLM for line-by-line feedback on time complexity, readability, edge cases, and more.
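A minimal sketch of a reviewer, assuming openai>=1.0 and an existing `client`. The checklist in `REVIEW_INSTRUCTIONS`, the helper names, and the default model are illustrative choices rather than a fixed format. A low temperature keeps repeated reviews of the same code comparable.

```python
REVIEW_INSTRUCTIONS = (
    "You are a senior software engineer reviewing an interview solution. "
    "Report: 1) time complexity, 2) space complexity, 3) missed edge cases, "
    "4) readability issues, 5) one alternative approach with its trade-offs. "
    "Be concise and refer to specific lines."
)


def build_review_messages(problem: str, code: str, language: str = "python") -> list[dict]:
    """Package the problem statement and the candidate solution for review."""
    user = f"Problem:\n{problem}\n\nMy {language} solution:\n{code}"
    return [
        {"role": "system", "content": REVIEW_INSTRUCTIONS},
        {"role": "user", "content": user},
    ]


def review_code(client, problem: str, code: str, model: str = "gpt-4o") -> str:
    """Ask the model for a structured review and return its text."""
    response = client.chat.completions.create(
        model=model,
        messages=build_review_messages(problem, code),
        temperature=0.2,  # low temperature for more consistent reviews
    )
    return response.choices[0].message.content
```

Including the problem statement matters: without it, the model can only guess which edge cases are relevant.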

Sample Feedback:

"Time complexity: O(n) ✅. Space complexity: O(1) ✅. However, this fails if the input is None. Add a check for 'if not head: return None'. Alternative approach: Recursion (but O(n) space)."

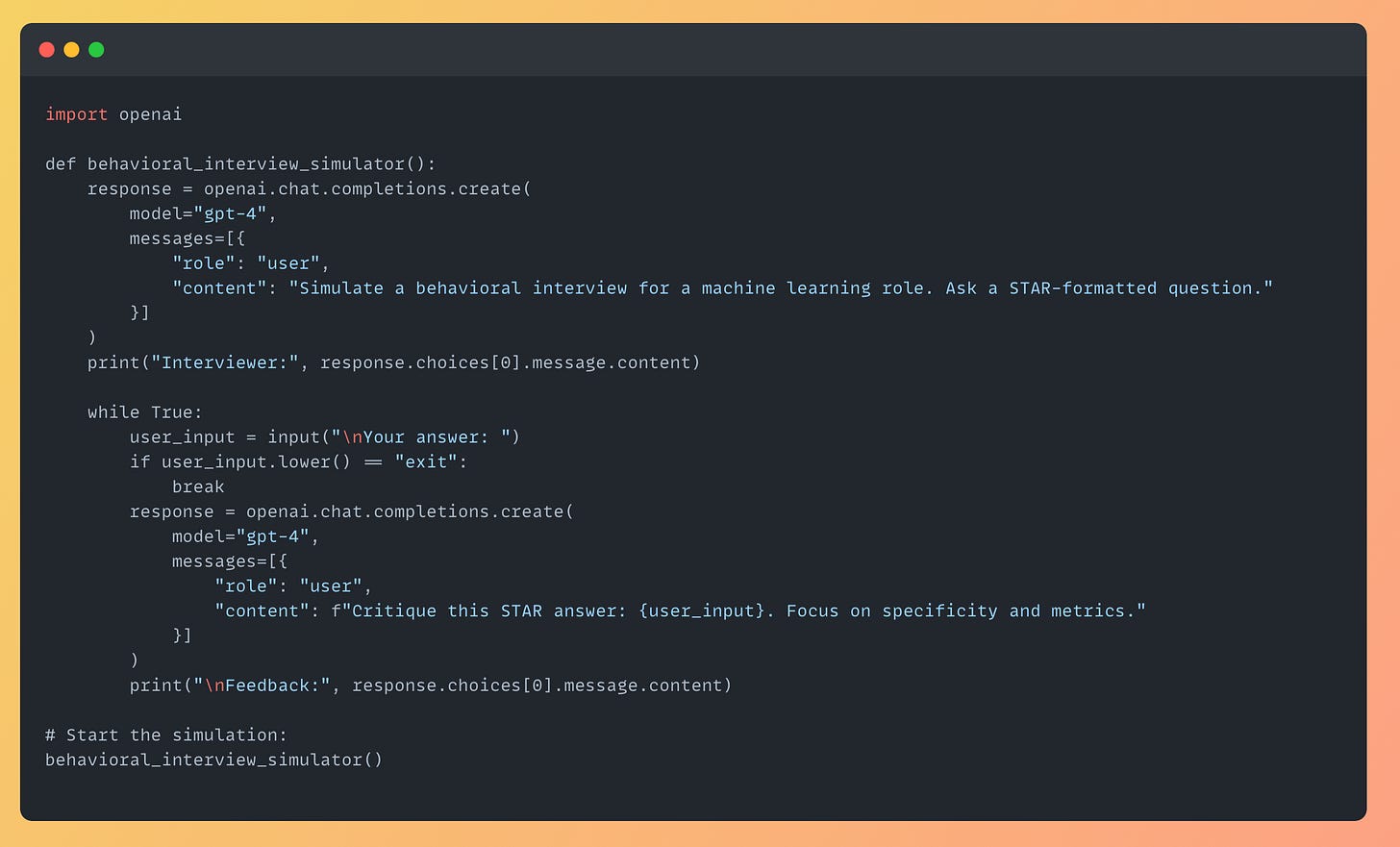

3. Behavioral Interview Simulator: Practice STAR Stories

The Problem: Behavioral questions catch even seasoned engineers off guard.

The Fix: An interactive script in which the LLM plays the interviewer and grills you in real time.
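A minimal sketch of the interview loop, assuming openai>=1.0; `run_interview`, `INTERVIEWER_PROMPT`, and the default model are hypothetical choices. The chat API does not remember earlier turns, so the script keeps the whole conversation in a list and resends it on every call. `ask_user` and `show` default to `input` and `print` but can be swapped out, for example to drive a web UI.

```python
INTERVIEWER_PROMPT = (
    "You are a behavioral interviewer at a tech company. Ask one question at a "
    "time that invites a STAR answer (Situation, Task, Action, Result). After "
    "each answer, give brief feedback on specificity and measurable outcomes, "
    "then ask the next question."
)


def chat(client, messages: list[dict], model: str) -> str:
    """Send the full conversation and return the assistant's reply."""
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content


def run_interview(client, rounds: int = 3, model: str = "gpt-4o",
                  ask_user=input, show=print) -> list[dict]:
    """Alternate interviewer turns with your answers; return the transcript."""
    messages = [
        {"role": "system", "content": INTERVIEWER_PROMPT},
        {"role": "user", "content": "I'm ready. Please ask your first question."},
    ]
    for _ in range(rounds):
        reply = chat(client, messages, model)
        messages.append({"role": "assistant", "content": reply})
        show(f"Interviewer: {reply}")
        answer = ask_user("Your answer: ")
        messages.append({"role": "user", "content": answer})
    # One last call so the interviewer gives feedback on the final answer.
    reply = chat(client, messages, model)
    messages.append({"role": "assistant", "content": reply})
    show(f"Interviewer: {reply}")
    return messages
```

In a terminal, `run_interview(client)` runs a three-question session; the returned transcript can be saved to review later.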

Sample Interaction:

Interviewer: "Describe a time you improved a model’s performance. What was your action and the measurable outcome?"

Your answer: "I tweaked hyperparameters and got better accuracy."

Feedback: "Too vague! Specify which hyperparameters (e.g., learning rate, batch size) and quantify the improvement (e.g., 'accuracy increased from 82% to 89%')."

Caveats and Pro Tips

Fact-Check Everything: LLMs hallucinate. Cross-validate technical answers with official docs.

Privacy First: Never paste sensitive code (e.g., from your employer) into public APIs.

Prompt Engineering:

For role-specific prep: "You are an Amazon principal engineer. Ask me a system design question about distributed systems."

For deeper feedback: "Grade my answer on clarity (1-5), specificity (1-5), and technical depth (1-5)."
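Rubric grades are easier to track across practice sessions if you ask for machine-readable output. A sketch, assuming you instruct the model to reply with JSON only; models don't always comply, so `parse_grades` (a hypothetical helper) extracts the first {...} span from the reply and validates each score. The rubric keys mirror the grading prompt above.

```python
import json
import re

RUBRIC = ("clarity", "specificity", "technical_depth")

GRADING_PROMPT = (
    "Grade my answer on clarity (1-5), specificity (1-5), and technical depth "
    "(1-5). Reply with JSON only, for example: "
    '{"clarity": 3, "specificity": 2, "technical_depth": 4, "comment": "..."}'
)


def parse_grades(reply: str) -> dict[str, int]:
    """Extract and validate rubric scores from a model reply."""
    # Tolerate extra text around the JSON object.
    match = re.search(r"\{.*\}", reply, re.DOTALL)
    if match is None:
        raise ValueError("No JSON object found in reply")
    data = json.loads(match.group(0))
    grades = {}
    for key in RUBRIC:
        score = data.get(key)
        if not isinstance(score, int) or not 1 <= score <= 5:
            raise ValueError(f"Missing or out-of-range score for {key!r}: {score!r}")
        grades[key] = score
    return grades
```

Send `GRADING_PROMPT` together with your answer, then pass the reply text to `parse_grades`; logging the results lets you see whether your specificity scores actually improve over time.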

Conclusion: Pair LLMs with Human Judgment

LLMs are accelerators, not replacements. Use them to generate questions and spot blind spots—then practice with real humans to polish delivery.

Next Steps:

Find all the code in this post here.

I built an AI interviewer with a web UI; get the entire code and usage instructions here.

Got questions? Hit reply or leave a comment. Now go crush those interviews!

If you liked this article, consider subscribing to my Substack!